A small Python program for performing the Jury stability test. It is analogous to the Routh-Hurwitz program presented here, but for discrete-time rather than continuous-time systems. As with the Routh-Hurwitz program, this script can accept algebraic expressions for the coefficients of the characteristic polynomial.

https://dl.dropbox.com/u/1614464/routhHurwitz/jury.py
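
For anyone who just wants the gist of the test, here is a minimal numeric sketch. It is not the script linked above (that one handles algebraic coefficients); it only applies the same kind of degree-by-degree reduction that Jury's table is built on, to plain numbers. The function name `is_schur_stable` is made up for this example.

```python
def is_schur_stable(coeffs):
    """Return True if every root of the polynomial lies strictly inside the unit circle.

    coeffs lists real coefficients from the highest power of z down to the
    constant term, e.g. z^2 - 0.5 z + 0.06 -> [1, -0.5, 0.06].
    """
    a = list(coeffs)
    if not a or a[0] == 0:
        raise ValueError("leading coefficient must be non-zero")
    while len(a) > 1:
        n = len(a) - 1
        # Necessary condition at each stage: |constant term| < |leading term|.
        if abs(a[n]) >= abs(a[0]):
            return False
        # Reduce the degree by one: b_i = a_0 * a_i - a_n * a_(n-i).
        a = [a[0] * a[i] - a[n] * a[n - i] for i in range(n)]
    return True


print(is_schur_stable([1, -0.5, 0.06]))  # roots at 0.2 and 0.3
print(is_schur_stable([1, -1.5, 0.5]))   # roots at 1.0 and 0.5
```

A root sitting exactly on the unit circle (as in the second call) counts as not stable here, since the comparison is strict.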

Wednesday, December 5, 2012

Tuesday, December 4, 2012

A server-side RSS aggregator, forked from Steven Minutillo's "Feed on Feeds"

I started this project because I was looking for a simple alternative to Google Reader that I could run on a cheap shared PHP host. Strangely enough, there wasn't a lot of choice; I initially considered TinyTinyRSS, but didn't really like it. I also came across Feed on Feeds (fof), by Steven Minutillo, which seemed to be exactly what I wanted - a lightweight and simple PHP feed aggregator.

Unfortunately, development on fof appears to have stalled (there have only been 5 or so code commits since 2009). Since the code base for the project was so small, I decided to fork it and add a few features/bugfixes. However, once I started looking at the code, it soon became apparent that the security of the software was severely lacking.

There were all sorts of nasties lurking in this code, which I've now fixed, including

- An arbitrary code execution vulnerability (user data passed directly to create_function)

- Numerous privilege checking problems (for example, any user could change another user's password, or uninstall the software)

- A vulnerability allowing an attacker to read and write arbitrary data to the database

- a poorly designed and insecure login system (used the hash of the user's password as a token)

- password hashes were stored unsalted

- logging system leaked data - logs were publicly viewable, and contained sensitive information (session ids, etc)

- no CSRF prevention

- two open redirect vulnerabilities

- about 30 XSS vulnerabilities
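
To make two of the items above concrete (unsalted hashes, and a login token derived from the password hash), here is a rough sketch of the usual remedy. It is written in Python purely for illustration and is not code from the fork, which is PHP; the function names and the iteration count are my own choices for the example.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 200_000  # illustrative work factor, not taken from the fork


def hash_password(password, salt=None):
    """Return (salt, digest), using a fresh random salt unless one is supplied."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected_digest)


def new_session_token():
    """A login token that is random, so it reveals nothing about the password."""
    return secrets.token_urlsafe(32)


salt, digest = hash_password("correct horse")
print(verify_password("correct horse", salt, digest))
print(verify_password("wrong guess", salt, digest))
```

The point of the random token is that stealing it gives an attacker one session, not something equivalent to the password itself.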

This project was an excellent lesson in how difficult it can be to add security features to insecurely designed software. The only reason doing so was feasible in this case is that the code-base was so small! It was also a good excuse to learn about all sorts of different attacks and how to prevent them. Although it was pretty tedious hardening the code, it ended up being a pretty valuable experience.

In future, I plan to make the following changes:

- rewrite the database layer to use PDO (and hence support databases other than MySQL), or else use some kind of ORM

- allow new users to register accounts

- add a RESTful API, and possibly also create a new user interface

- perform a lot of code clean-up and refactoring, and optimise the JavaScript parts of the code

The forked code can be downloaded here:

https://dl.dropbox.com/u/1614464/fof/fof-1.0.1.tar.gz

In the absence of a proper change log, here's the subversion commit log (so you can see what has been changed):

https://dl.dropbox.com/u/1614464/fof/svn_log_1.0.1.txt

I really should move this project (as in the code hosting and bug tracking) onto some kind of hosted service (right now I'm doing all the bug-tracking etc on my local machine). Maybe I'll do that sometime in the future too.

Monday, June 25, 2012

Poll mod 0.1.3

Minor bug fix for the PunBB poll mod.

Fixes issue with "double escaping". Poll options containing characters <, >, &, ' or " will now display correctly.

Mod is still secure against XSS to the best of my knowledge.

https://dl.dropbox.com/u/1614464/robs_poll_mod/robs_poll_mod_0.1.3.tar.gz
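
In case "double escaping" is unclear, this short Python sketch shows the effect. The mod itself is PHP, so this is only an illustration of the bug class, and the sample poll option is made up.

```python
import html

option = 'Tom & Jerry <3 "cartoons"'

once = html.escape(option, quote=True)   # what should be written into the page
twice = html.escape(once, quote=True)    # what the bug effectively produced

print(once)
print(twice)
print(html.unescape(once) == option)
```

Escaping once is what keeps the output safe against XSS; escaping a second time only mangles what the reader sees, so removing the extra pass doesn't weaken anything.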

Sunday, April 22, 2012

wget and echoproxy work again!

Follow-up to http://98percentidle.blogspot.com.au/2012/03/uwa-changes-its-echo360-configuration.html

UWA have removed the authentication screen when viewing lectures on echo360. Wget and echoproxy will work again (without me having to make any modifications, yay!)

Monday, April 9, 2012

Facebook link scrubber

A script to remove the tracking code from links posted to Facebook (ie it stops Facebook detecting which of your friends' links you choose to click on).

Works in Chrome and Firefox+Greasemonkey.

http://dl.dropbox.com/u/1614464/fbls.user.js
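
The user script itself is JavaScript and isn't reproduced here. As a rough illustration of the idea only, the Python sketch below assumes the tracked link is a redirect of the form `http://www.facebook.com/l.php?u=<encoded target>&h=<token>`. That link format is my assumption for the example, and `scrub_link` is a made-up name.

```python
from urllib.parse import urlparse, parse_qs


def scrub_link(href):
    """Return the real destination if href is an l.php redirect, else href unchanged."""
    parts = urlparse(href)
    host = parts.hostname or ""
    on_facebook = host == "facebook.com" or host.endswith(".facebook.com")
    if on_facebook and parts.path == "/l.php":
        targets = parse_qs(parts.query).get("u")
        if targets:
            return targets[0]  # parse_qs has already percent-decoded the value
    return href


print(scrub_link("http://www.facebook.com/l.php?u=http%3A%2F%2Fexample.com%2Fpage%3Fid%3D1&h=abc"))
```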

Saturday, March 24, 2012

UWA changes its Echo360 configuration - no more wget, no more echo-proxy (for now)!

There was a lot of downtime for the Echo360 system this week. What have UWA's IT crew been busy implementing?

This

A login page, which comes up the first time you try to view any lectures. Hilariously, it refers to itself as a "security check". I presume that it sets some kind of cookie, which then gets sent back to the UWA server when a request is made for mediacontent.m4v. I say this because the Firefox extension still works perfectly (you just need to log in at the above screen; after that, everything is as before).

Above: firefox extension, working, despite the "Security Check". Lecture downloaded successfully. Hahaha >:-)

The only minor problem is that the update has broken echo-proxy (since it doesn't currently have any facilities for performing the log-in). I'm also unable to use wget, for similar reasons (which is a shame, because wget made it easy to schedule my downloads for "off-peak" periods, which would have reduced UWA's server load).

In all honesty, it probably won't be too difficult to update echo-proxy to automatically get past the login page. It will take a little bit of time to investigate how UWA have implemented everything, and then a bit of time to sidestep it, but I'm pretty certain it can be done (it's rather difficult to block people from accessing content which they have permission to access, lol). Please be patient. In the meantime, you can use firefox without issue.

As for using wget, curl will probably be a good alternative. This shall require some investigation.

UPDATE: As it transpires, the system does indeed work by setting a cookie on the client side (called "ECP-HEMS-SESSION"; its contents indicate which units may be accessed, and it expires daily). So dear Echo360 admins/developers, please understand the following:

When your "Security Check" relies solely on setting client side data (ie a cookie), that is no security at all. Do you actually understand that I have complete control over the cookie that I send you? All you have done is create a trivially bypassed annoyance. And to implement this annoyance, you took the system down for several days. Well done, well done.
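
To spell out what "complete control over the cookie" means, here is a tiny Python sketch. The URL and the cookie value are placeholders invented for the example, and nothing is actually sent anywhere.

```python
import urllib.request

# Hypothetical URL and cookie value, for illustration only.
request = urllib.request.Request(
    "http://lectures.example/mediacontent.m4v",
    headers={"Cookie": "ECP-HEMS-SESSION=whatever-the-client-chooses"},
)

# Nothing is sent here; this only shows that the header is written by the client.
print(request.get_header("Cookie"))
```

A server can only trust a cookie if it can check the value against something it holds itself (a session table, or a signature over the contents).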

Friday, March 23, 2012

Why Echo360 Fails on Chrome

In firefox, lectures can be downloaded simply by requesting the file "mediacontent.m4v". Attempting to do the same in Chrome, however, gives us the following error:

Oh Noes!

If we actually look at the headers the server is sending us, the problem quickly becomes apparent:

The Content-Disposition Header is sent twice. Chrome conservatively interprets this as a potential response splitting attack.

As you can see from the above screenshot, UWA's Echo360 server erroneously sends the Content-Disposition header twice. Because of the non-standard response, Chrome will refuse the connection. Why refuse the connection? Well, from Chrome's perspective, the double header could be a symptom of an HTTP response splitting attack, so the connection is refused on security grounds. In actuality, there is no security problem; simply put, the developers of Echo360 don't know how to write a web server (other users of Echo360 have reported the same issue, eg UniSA).
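
If you'd like to check a response for this yourself, a sketch along the following lines would do. The sample response head is made up (the real server's header values aren't reproduced here), and `repeated_headers` is a name invented for the example.

```python
from collections import Counter


def repeated_headers(raw_head):
    """Return the header names (lower-cased) that occur more than once."""
    lines = raw_head.split("\r\n")[1:]  # skip the status line
    names = [line.split(":", 1)[0].strip().lower() for line in lines if ":" in line]
    return sorted(name for name, count in Counter(names).items() if count > 1)


# Made-up response head for illustration.
sample = (
    "HTTP/1.1 200 OK\r\n"
    "Content-Type: video/mp4\r\n"
    "Content-Disposition: attachment; filename=mediacontent.m4v\r\n"
    "Content-Disposition: attachment; filename=mediacontent.m4v\r\n"
    "\r\n"
)
print(repeated_headers(sample))
```

Note that some headers (Set-Cookie, for instance) may legitimately appear more than once, so a repeat is only a problem for headers that are meant to be unique.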

This is why it is necessary to use a proxy to download lectures when using Chrome (as described previously). Instead of directly requesting "mediacontent.m4v" through Chrome, we send the request through to a locally running proxy server. The proxy server will then download "mediacontent.m4v" from UWA's server, ignoring the double header (we can safely ignore it, because we know that the double header is the result of incompetence, not malice). The proxy server will then send the contents of "mediacontent.m4v" through to the browser, this time with the HTTP headers set correctly.

Although the proxy server ignores duplicate headers, this does not create a security problem. The proxy server will only download data from UWA, where it is known that the duplicate headers are not a problem.

In any case the proxy server does not pass any headers through to the browser - it completely re-writes them.

It should be noted that there is no implication in the above that the software is completely invulnerable to all forms of response splitting (for example, an attacker could modify the contents of the downloaded file, and there is no way to detect that). The only claim I make is that it is safe to ignore the duplicate header (a specific type of response splitting) in the limited set of circumstances I've outlined (downloading lectures from UWA's Echo360 server using the proxy I provided). But realistically, you really shouldn't run into any security problems at all.

An echo-proxy build that actually works

In a previous post, I provided some software for downloading lectures from UWA's new Echo360 system. Due to duplicate header problems, downloading lectures on Chrome was quite difficult; it was necessary to install a small proxy server (written in java) to get things going.

I've only just realised that the proxy server build I provided doesn't actually run; if you try to run it, you'll get a "class not found" error message. Basically, I done goofed.

The problem is now fixed, and the build linked below should actually work.

http://dl.dropbox.com/u/1614464/echo_script/echo_proxy_0.1.1.jar

The source code, including the updated build script is here:

http://dl.dropbox.com/u/1614464/echo_script/echo-proxy_source_0.1.1.tar.gz

Instructions for set-up and installation are unchanged; follow the procedure outlined in the previous post.

The error was due to the way java resolves dependencies within jar files (or perhaps more correctly, the error is due to me not reading all the documentation properly).

When you run a compiled java program "theprogram" from the command line, which has some dependencies in the file "dependencies.jar", you can get everything to work by setting the classpath. ie

java -cp .:dependencies.jar theprogram

All well and good. But now suppose that you want to package theprogram into a jar. It is tempting to think that you could just set the classpath as before in the jar manifest

ie

echo "Main-Class: theprogram" > manifest.txt

echo "Class-Path: . dependencies.jar" >> manifest.txt

echo "" >> manifest.txt

jar cfm jarfile.jar manifest.txt theprogram.class dependencies.jar

Unfortunately, when you try to run jarfile.jar, you'll get an error. Essentially, you can't have dependency jars within jars. Which is really quite annoying. There are several ways to solve the problem; you can extract the contents of dependencies.jar, and then add the contents to jarfile.jar, for example. Another method is to write a custom class loader as part of your program. But neither of these are particularly good solutions: the first can lead to naming conflicts with complicated dependencies, while the second is unnecessarily complicated (particularly in a small project) and requires the program code to be modified before it is packaged.

Probably the best solution would be to actually fix the java runtime. In fact, this particular nuisance is one of the top 25 RFEs for java; it has been for the last ten years. Sadly, it seems Oracle doesn't really care. While the problem is only a small nuisance, with several available workarounds, it surely wouldn't be too hard to fix.
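
For what it's worth, the first workaround (unpacking dependencies.jar into jarfile.jar) can be sketched in a few lines, since a jar is just a zip file. This is an illustration in Python, not the build script shipped with the source above; `merge_jars` and the output file name are made up for the example.

```python
import zipfile


def merge_jars(main_jar, dependency_jar, output_jar):
    """Copy every entry of main_jar, then the non-clashing entries of dependency_jar."""
    seen = set()
    with zipfile.ZipFile(output_jar, "w", zipfile.ZIP_DEFLATED) as out:
        for source, keep_manifest in ((main_jar, True), (dependency_jar, False)):
            with zipfile.ZipFile(source) as jar:
                for name in jar.namelist():
                    if name == "META-INF/MANIFEST.MF" and not keep_manifest:
                        continue  # keep only the main jar's manifest
                    if name in seen:
                        continue  # first copy wins; this is where naming conflicts bite
                    seen.add(name)
                    out.writestr(name, jar.read(name))
```

You'd call it as `merge_jars("jarfile.jar", "dependencies.jar", "jarfile-all.jar")`, with a main jar that contains only theprogram.class and a manifest without the Class-Path line. The "first copy wins" rule is exactly the naming conflict problem mentioned above: if two dependencies ship a file with the same path, one of them silently loses.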

Friday, March 9, 2012

Firefox and Chrome Extensions for Downloading Lectures from Echo360

By now, we've probably all discovered UWA's new "Echo360" Lecture Capture System is a POS. By which I mean "Piece of Shit", and not "Public Open Space" / "Point of Sale".

In particular, most lectures are not available for downloading. Which is unfortunate, as it means having to watch lecture recordings at 1x speed and putting up with irritating "buffering" screens.

Annoyingly, you still get the buffering screen even when you skip back to parts of the lecture that have already loaded. Truly the developers of Echo360 are wonderful and competent people.

Fear not! I've created some browser extensions for Firefox and Chrome to allow the lectures to be downloaded. The Firefox extension is actually pretty trivial, but getting things running on Chrome is much more fiddly. I haven't done an enormous amount of testing, but everything seems to work, at least on the two machines I've tried it on. Hopefully someone else will find this stuff useful too.

For Firefox:

Click on the link below to install the firefox extension:

http://dl.dropbox.com/u/1614464/echo_script/echodownloader.xpi

Restart firefox; that's it! Now whenever you view a lecture, you'll be presented with a (rather ugly looking) link to download the lecture at the top of the screen.

Echo Downloader on Firefox 10

I've only tested the extension on firefox 10 (Linux and OSX). YMMV, but it should run fine on firefox > 2. If you have NoScript installed, and try using Echo360 "normally", it may warn about a potential "clickjacking" attack. It's perfectly safe to ignore this warning (ie it's the extension that's "potentially clickjacking" you).

For Chrome:

Downloading the lectures on Chrome is quite a bit more difficult. The reasons for this are technical; basically, UWA's server sends a non-standard response when you try to download any lectures, and chrome freaks out about it.

I'll go into the details in another post; for now suffice it to say that a good way to get around this issue is to run a proxy server. This sounds more complicated than it actually is; from the user's perspective all you'll need to do is install and run one additional piece of software on your computer (in addition to a browser extension). I'll give the instructions on how to get things working first, before I discuss how it works, firewalls, security, etc.

First, click on the link below to install the browser extension:

http://dl.dropbox.com/u/1614464/echo_script/Echo_Downloader_chrome.user.js

Next, download the proxy server:

http://dl.dropbox.com/u/1614464/echo_script/echo_proxy.jar

Before you can download any lectures, you'll need to start the server. It's written in Java, so hopefully it'll run fine on whatever Operating System you use. For now, starting the server has to be done from the command line. This is not really as user friendly as I'd like, and I'll possibly look at packaging things better in future (subject to time/enthusiasm).

Anyway, to start the server, do the following

On Windows:

Select Start->run. Type "cmd" and press enter. At the command prompt, execute the following

java.exe -jar "C:\Documents and Settings\username\Downloads\echo_proxy.jar"

Where obviously, you replace C:\Documents etc with the folder where you actually saved the code.

On OSX:

Run the "Terminal" application (you can do this by typing "Terminal" into spotlight). Run the following command

java -jar Downloads/echo_proxy.jar

Where again, you replace Downloads/ with the folder where you saved the code. If you want to stop the server, press Control-C.

On Linux:

I assume you know what you're doing.

Hopefully everything will work. You should now be able to download the lectures - just click the link at the top of the screen (see below).

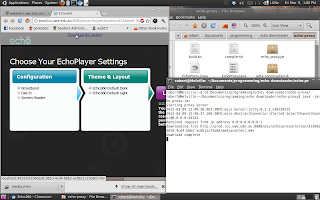

Echo Downloader running on Chromium, with aid of proxy server (note the URL in the status bar).

Further comments on the proxy server

1. Starting the server on login

The server must be running before you can download lectures, but starting the server is a little annoying. It would be quite easy to set things up so that the server runs whenever you start your computer (so you could just set and forget). I'll possibly write a howto on doing that in another post (this one's starting to get long and unwieldy).

2. Security

The proxy server will only accept requests from the machine on which it is running (ie no remote requests), and will only download files from the domain prod.lcs.uwa.edu.au. This is for various security reasons; I'll explain the detail in another post. The point I'm trying to make is that although I hacked these extensions together quite quickly, and some of the code is a bit crap, it should be quite safe to run.

3. Firewalls

The proxy server listens on port 49152 (as this is an unreserved port). Why not use port 8080 or 80? Because I already have servers running on those ports, and I wrote this stuff for my own benefit. The proxy will also use port 8080 when it downloads the lecture from UWA's server. If you have a firewall running, and it complains about traffic on port 49152 or 8080, then there's nothing wrong - you can allow the traffic.

4. Source

If you'd like to download the source code for the proxy server (to modify/check I'm not sniffing your bank account details), you can get it here:

http://dl.dropbox.com/u/1614464/echo_script/echo-proxy_source_0.1.tar.gz

There's really not much to it, it's less than 100 lines of code. All the hard bits are done by Jetty.
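
To give a feel for the two restrictions described under "Security", here is a rough Python sketch of the same idea. The real proxy is Java on top of Jetty, so this is not its code; the handler, the function name and the way the target URL is passed in the path are all assumptions made for the example.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlparse

ALLOWED_HOST = "prod.lcs.uwa.edu.au"   # the only upstream domain named in this post
LISTEN_ADDRESS = ("127.0.0.1", 49152)  # loopback only, so remote machines cannot connect


def is_allowed_target(url):
    """Accept only http(s) URLs whose host is exactly the allowed domain."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and parts.hostname == ALLOWED_HOST


class ProxyHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Hypothetical convention: the target URL is everything after the leading "/".
        target = self.path[1:]
        if not is_allowed_target(target):
            self.send_error(403, "Target host not allowed")
            return
        # Fetching the lecture and re-sending it with freshly written headers
        # is left out of this sketch.
        self.send_error(501, "Fetching not implemented in this sketch")
```

Starting it would be a matter of `HTTPServer(LISTEN_ADDRESS, ProxyHandler).serve_forever()`. Binding to 127.0.0.1 rather than all interfaces is what keeps other machines out, and comparing the parsed hostname (rather than searching the URL for a substring) is what stops a URL like `http://prod.lcs.uwa.edu.au.evil.example/` from slipping through.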

Other browsers/platforms:

I originally developed the Firefox extension using Greasemonkey. If you already have Greasemonkey installed, you may as well just use this script

http://dl.dropbox.com/u/1614464/echo_script/Echo_Downloader_firefox.user.js

Rather than downloading and installing the extension.

If for some bizarre reason you still use Internet Explorer, I believe there's software available on the tubes that allows you to run Greasemonkey scripts in IE. Similar comments apply for Safari. Opera users (all 10 of you), should be able to run the Greasemonkey scripts out of the box. Here's the first result I got when googling how to do these things - it appears to be helpful.

http://techie-buzz.com/tips-and-tricks/greasemonkey-alternatives-for-ie-opera-and-safari.html

I have no idea whether everything will work correctly or not; my guess is probably not. You're welcome to try and get things running, but I have no intention to support browsers other than firefox and chrome.

Finally:

I may make some improvements, depending on how much time and/or motivation I have. Feedback is welcome, although it may be ignored. You're also welcome to modify/redistribute any of the code I've posted.

Any updates will be posted here. I'm currently looking at integrating the download links with the screen in LMS that shows the list of available lectures. In theory it should be very easy. Sadly, the Echo360 developers have decided that placing iframes within iframes within iframes and not giving any DOM elements an id is a good idea; this is making the job quite a bit more fiddly.
